Red Hat OpenShift v1

EDB Postgres Distributed for Kubernetes is a certified operator that can be installed on OpenShift using a web interface.

Ensuring access to EDB private registry

Important

You need access to the private EDB repository where both the operator and operand images are stored. Access requires a valid EDB subscription plan. See Accessing EDB private image registries for details.

The OpenShift install uses pull secrets to access the operand and operator images, which are held in a private repository.

Once you have credentials to the private repo, you need to create two pull secrets in the openshift-operators namespace:

- pgd-operator-pull-secret for the EDB Postgres Distributed for Kubernetes operator images

- postgresql-operator-pull-secret for the EDB Postgres for Kubernetes operator images

You can create each secret using the oc create command:

    oc create secret docker-registry pgd-operator-pull-secret \
      -n openshift-operators --docker-server=docker.enterprisedb.com \
      --docker-username="@@REPOSITORY@@" \
      --docker-password="@@TOKEN@@"

    oc create secret docker-registry postgresql-operator-pull-secret \
      -n openshift-operators --docker-server=docker.enterprisedb.com \
      --docker-username="@@REPOSITORY@@" \
      --docker-password="@@TOKEN@@"

Where:

- @@REPOSITORY@@ is the name of the repository, as explained in Which repository to choose?

- @@TOKEN@@ is the repository token for your EDB account, as explained in How to retrieve the token.
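Because the two commands differ only in the secret name, they can be wrapped in a small helper. The following is a minimal sketch rather than part of the documented procedure: the function name create_pull_secrets and the environment variables EDB_REPOSITORY and EDB_TOKEN are hypothetical names chosen for illustration. It uses only the oc create secret docker-registry flags already shown in this section.

```shell
# Sketch: create both pull secrets with one helper.
# create_pull_secrets, EDB_REPOSITORY, and EDB_TOKEN are illustrative names;
# neither oc nor the operator defines them.
create_pull_secrets() {
  # $1: repository name (the value that replaces @@REPOSITORY@@)
  # $2: repository token (the value that replaces @@TOKEN@@)
  for secret in pgd-operator-pull-secret postgresql-operator-pull-secret; do
    oc create secret docker-registry "$secret" \
      -n openshift-operators \
      --docker-server=docker.enterprisedb.com \
      --docker-username="$1" \
      --docker-password="$2" || return 1
  done
}

# Example call, commented out so that sourcing this file has no side effects:
# create_pull_secrets "$EDB_REPOSITORY" "$EDB_TOKEN"
```

The helper stops at the first failure, so a wrong token doesn't leave you guessing which of the two secrets was created.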

Installing the operator

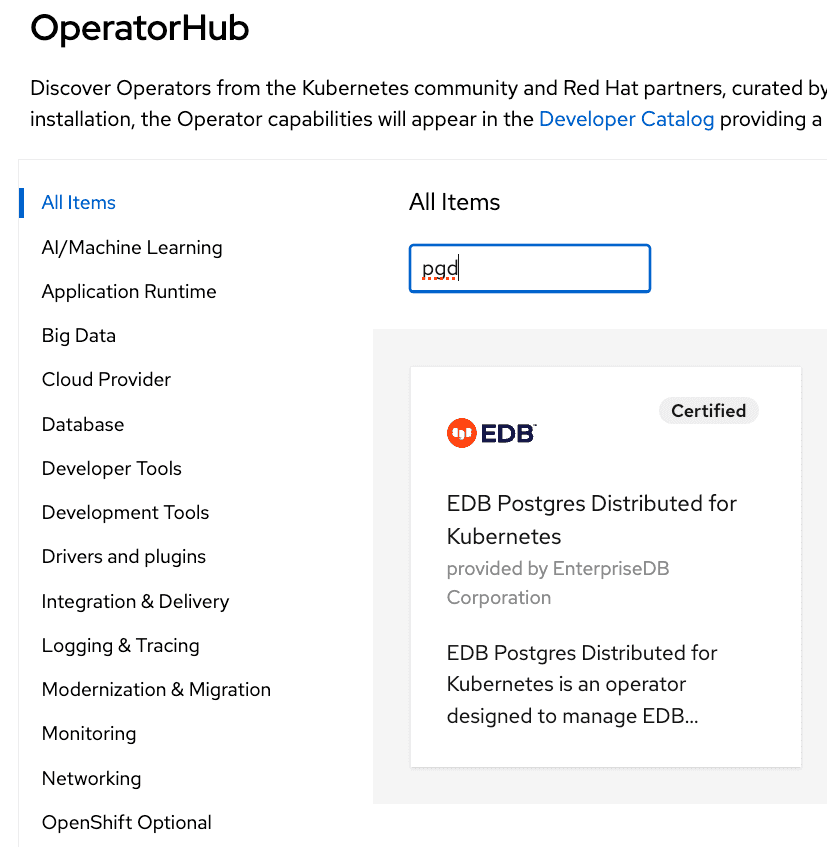

The EDB Postgres Distributed for Kubernetes operator can be found in the Red Hat OperatorHub directly from your OpenShift dashboard.

From the hamburger menu, select Operators > OperatorHub.

In the web console, use the search box to filter the listing. For example, enter EDB or pgd.

Read the information about the operator and select Install.

In the Operator Installation page, select:

- The installation mode. Cluster-wide is currently the only mode.

- The update channel (currently preview).

- The approval strategy, which controls what happens when a new release of the operator, certified by Red Hat, becomes available on the marketplace:

  - Automatic: OLM upgrades the running operator with the new version.

  - Manual: OpenShift waits for human intervention by requiring an approval in the Installed Operators section.
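With the Manual strategy, the approval can also be given from the command line instead of the Installed Operators section. The sketch below assumes the standard OLM InstallPlan resource and its spec.approved field. The helper name approve_install_plan and the install plan name in the example are hypothetical.

```shell
# Sketch: approve a pending OLM InstallPlan from the CLI.
# approve_install_plan is an illustrative helper name.
approve_install_plan() {
  # $1: name of the InstallPlan, as listed by
  #     oc get installplan -n openshift-operators
  oc patch installplan "$1" \
    -n openshift-operators \
    --type merge \
    --patch '{"spec":{"approved":true}}'
}

# Example (install-abcde is a placeholder, not a real plan name):
# oc get installplan -n openshift-operators
# approve_install_plan install-abcde
```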

Cluster-wide installation

With cluster-wide installation, you're asking OpenShift to install the operator in the default openshift-operators namespace and to make it available to all the projects in the cluster.

This is the default and normally recommended approach to install EDB Postgres Distributed for Kubernetes.

From the web console, for Installation mode, select All namespaces on the cluster (default).

On installation, the operator is visible in all namespaces. If problems occur during installation, check the logs of the pods in the openshift-operators project on the Workloads > Pods page, as you would with any other OpenShift operator.
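The same check can be made from the command line. This is a minimal sketch, assuming the PGD operator runs as the pgd-operator-controller-manager deployment in openshift-operators. The helper name show_operator_logs is hypothetical, and the 50-line tail is an arbitrary choice.

```shell
# Sketch: list the operator pods and print recent operator logs.
# show_operator_logs is an illustrative helper name.
show_operator_logs() {
  # $1: deployment to inspect (defaults to the PGD operator deployment)
  deployment="${1:-pgd-operator-controller-manager}"
  oc get pods -n openshift-operators
  oc logs "deployment/$deployment" \
    -n openshift-operators \
    --all-containers=true \
    --tail=50
}

# Example:
# show_operator_logs
```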

Beware

By choosing the cluster-wide installation, you can't easily move to a single-project installation later.

Creating a PGD cluster

After the installation by OpenShift, the operator deployment is in the openshift-operators namespace. Notice the cert-manager operator was also installed, as was the EDB Postgres for Kubernetes operator (postgresql-operator-controller-manager).

    $ oc get deployments -n openshift-operators
    NAME                                            READY   UP-TO-DATE   AVAILABLE   AGE
    cert-manager-operator                           1/1     1            1           11m
    pgd-operator-controller-manager                 1/1     1            1           11m
    postgresql-operator-controller-manager-1-20-0   1/1     1            1           23h
    …

After checking that the pgd-operator-controller-manager deployment is READY, you can start creating PGD clusters. The EDB Postgres Distributed for Kubernetes repository contains some useful sample files.
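If you script the installation, the readiness check can block until the deployment has rolled out. This is a sketch that uses oc rollout status. The helper name wait_for_operator and the 120-second default timeout are assumptions made for illustration.

```shell
# Sketch: block until the PGD operator deployment has rolled out.
# wait_for_operator is an illustrative helper name.
wait_for_operator() {
  # $1: timeout passed to oc rollout status (defaults to 120s)
  oc rollout status deployment/pgd-operator-controller-manager \
    -n openshift-operators \
    --timeout="${1:-120s}"
}

# Example:
# wait_for_operator 5m && echo "operator is ready"
```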

You must deploy your PGD clusters in a dedicated namespace/project. The default namespace is reserved.

First, create a new namespace and deploy a self-signed certificate Issuer in it:

    oc create ns my-namespace
    oc apply -n my-namespace -f \
      https://raw.githubusercontent.com/EnterpriseDB/edb-postgres-for-kubernetes-charts/main/hack/samples/issuer-selfsigned.yaml
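Before deploying a PGD cluster, it can help to confirm that cert-manager has finished setting up the issuers. The sketch below assumes that cert-manager reports a Ready condition on each Issuer. The helper name wait_for_issuers and the 60-second timeout are hypothetical choices.

```shell
# Sketch: wait until every cert-manager Issuer in a namespace is Ready.
# wait_for_issuers is an illustrative helper name.
wait_for_issuers() {
  # $1: namespace that holds the issuers
  oc wait --for=condition=Ready issuers.cert-manager.io --all \
    -n "$1" \
    --timeout=60s
}

# Example:
# wait_for_issuers my-namespace
```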

Using PGD in a single OpenShift cluster in a single region

Now you can deploy a PGD cluster, for example, a flexible 3-region cluster, which contains two data groups and a witness group. You can find the YAML manifest in the file flexible_3regions.yaml.

oc apply -f flexible_3regions.yaml -n my-namespace

Your PGD groups start to come up:

    $ oc get pgdgroups -n my-namespace
    NAME       DATA INSTANCES   WITNESS INSTANCES   PHASE                PHASE DETAILS   AGE
    region-a   2                1                   PGDGroup - Healthy                   23m
    region-b   2                1                   PGDGroup - Healthy                   23m
    region-c   0                1                   PGDGroup - Healthy                   23m
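Rather than rerunning the command by hand, you can poll until every group reports a healthy phase. This is a rough sketch that matches the phase text exactly as printed in the sample output (PGDGroup - Healthy), so it would need adjusting if the operator prints something different. The helper names, the number of attempts, and the delay are all hypothetical.

```shell
# Sketch: poll oc get pgdgroups until every row shows "PGDGroup - Healthy".
# all_pgdgroups_healthy and wait_for_pgdgroups are illustrative helper names.
all_pgdgroups_healthy() {
  # $1: namespace to inspect
  rows="$(oc get pgdgroups -n "$1" --no-headers 2>/dev/null)"
  # No rows means no groups exist yet, which does not count as healthy.
  [ -n "$rows" ] || return 1
  # Succeed only if no row lacks the healthy phase text.
  ! printf '%s\n' "$rows" | grep -qv 'PGDGroup - Healthy'
}

wait_for_pgdgroups() {
  # $1: namespace, $2: number of polls (default 30),
  # $3: seconds between polls (default 10)
  attempts="${2:-30}"
  delay="${3:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    all_pgdgroups_healthy "$1" && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example:
# wait_for_pgdgroups my-namespace && echo "all PGD groups are healthy"
```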

Using PGD in multiple OpenShift clusters in multiple regions

To deploy PGD in multiple OpenShift clusters in multiple regions, you must first establish a way for the PGD groups to communicate with each other. The recommended way of achieving this with multiple OpenShift clusters is to use Submariner. Configuring the connectivity is outside the scope of this documentation. However, once you've established connectivity between the OpenShift clusters, you can deploy PGD groups synced with one another.

Warning

This example assumes you're deploying three PGD groups, one in each OpenShift cluster, and that you established connectivity between the OpenShift clusters using Submariner.

Similar to the single-cluster example, this example creates two data PGD groups and one witness group. In contrast to that example, each group lives in a different OpenShift cluster.

In addition to basic connectivity between the OpenShift clusters, you need to ensure that each OpenShift cluster contains a certificate authority that's trusted by the other OpenShift clusters. This condition is required for the PGD groups to communicate with each other.

The OpenShift clusters can all use the same certificate authority, or each cluster can have its own certificate authority. Either way, you need to ensure that each OpenShift cluster's certificates trust the other OpenShift clusters' certificate authorities.

This example uses a self-signed certificate that has a single certificate authority used for all certificates on all the OpenShift clusters.

The example refers to the OpenShift clusters as OpenShift Cluster A, OpenShift Cluster B, and OpenShift Cluster C. In OpenShift, an installation of the EDB Postgres Distributed for Kubernetes operator from OperatorHub includes an installation of the cert-manager operator. We recommend creating and managing certificates with cert-manager.

- On OpenShift Cluster A, create a namespace to hold the PGD group, and in it create the objects needed for a self-signed certificate. Assuming that the PGD operator and cert-manager are installed, create a self-signed certificate Issuer in that namespace.

      oc create ns pgd-group
      oc apply -n pgd-group -f \
        https://raw.githubusercontent.com/EnterpriseDB/edb-postgres-for-kubernetes-charts/main/hack/samples/issuer-selfsigned.yaml

- After a few moments, cert-manager creates the issuers and certificates. There are also now two secrets in the pgd-group namespace: server-ca-key-pair and client-ca-key-pair. These secrets contain the certificates and private keys for the server and client certificate authorities. You need to copy these secrets to the other OpenShift clusters before applying the issuer-selfsigned.yaml manifest. You can use the oc get secret command to get the contents of the secrets:

      oc get secret server-ca-key-pair -n pgd-group -o yaml > server-ca-key-pair.yaml
      oc get secret client-ca-key-pair -n pgd-group -o yaml > client-ca-key-pair.yaml

- After removing the content specific to OpenShift Cluster A from these secrets (such as uid, resourceVersion, and timestamp), you can switch context to OpenShift Cluster B. Then create the namespace, create the secrets in it, and only then apply the issuer-selfsigned.yaml file:

      oc create ns pgd-group
      oc apply -n pgd-group -f server-ca-key-pair.yaml
      oc apply -n pgd-group -f client-ca-key-pair.yaml
      oc apply -n pgd-group -f \
        https://raw.githubusercontent.com/EnterpriseDB/edb-postgres-for-kubernetes-charts/main/hack/samples/issuer-selfsigned.yaml

- You can switch context to OpenShift Cluster C and repeat the same process followed for Cluster B:

      oc create ns pgd-group
      oc apply -n pgd-group -f server-ca-key-pair.yaml
      oc apply -n pgd-group -f client-ca-key-pair.yaml
      oc apply -n pgd-group -f \
        https://raw.githubusercontent.com/EnterpriseDB/edb-postgres-for-kubernetes-charts/main/hack/samples/issuer-selfsigned.yaml
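The cleanup of the exported secrets mentioned in the steps above can be done with a small filter instead of a text editor. This is a minimal sketch, assuming that uid, resourceVersion, and creationTimestamp appear as single-line fields directly under metadata in the exported YAML. Any other cluster-specific fields (for example, ownerReferences) still need a manual review. The helper name strip_cluster_metadata and the .clean.yaml file names are hypothetical.

```shell
# Sketch: drop cluster-specific metadata fields from an exported Secret.
# strip_cluster_metadata is an illustrative helper name. It reads YAML on
# stdin and writes the filtered YAML to stdout.
strip_cluster_metadata() {
  # Delete only fields indented by exactly two spaces, that is, direct
  # children of metadata. Deeper nested fields are left untouched.
  sed -E '/^  (uid|resourceVersion|creationTimestamp):/d'
}

# Example (the .clean.yaml file names are placeholders):
# strip_cluster_metadata < server-ca-key-pair.yaml > server-ca-key-pair.clean.yaml
# strip_cluster_metadata < client-ca-key-pair.yaml > client-ca-key-pair.clean.yaml
```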

- On OpenShift Cluster A, you can create your first PGD group, called region-a. The YAML manifest for the PGD group is:

      apiVersion: pgd.k8s.enterprisedb.io/v1beta1
      kind: PGDGroup
      metadata:
        name: region-a
      spec:
        instances: 2
        proxyInstances: 2
        witnessInstances: 1
        pgd:
          parentGroup:
            name: world
            create: true
          discovery:
            - host: region-a-group.pgd-group.svc.clusterset.local
            - host: region-b-group.pgd-group.svc.clusterset.local
            - host: region-c-group.pgd-group.svc.clusterset.local
        cnp:
          storage:
            size: 1Gi
        connectivity:
          dns:
            domain: "pgd-group.svc.clusterset.local"
            additional:
              - domain: alternate.domain
              - domain: my.domain
                hostSuffix: -dc1
          tls:
            mode: verify-ca
            clientCert:
              caCertSecret: client-ca-key-pair
              certManager:
                spec:
                  issuerRef:
                    name: client-ca-issuer
                    kind: Issuer
                    group: cert-manager.io
            serverCert:
              caCertSecret: server-ca-key-pair
              certManager:
                spec:
                  issuerRef:
                    name: server-ca-issuer
                    kind: Issuer
                    group: cert-manager.io

Important

The format of the hostnames in the discovery section differs from the single-cluster example. That's because Submariner is being used to connect the OpenShift clusters, and Submariner uses the <service>.<ns>.svc.clusterset.local domain to route traffic between the OpenShift clusters. region-a-group is the name of the service to be created for the PGD group named region-a.
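To make the naming pattern concrete, the short sketch below builds the discovery hostnames from a group name and a namespace. The helper name clusterset_host is hypothetical. The pattern itself, with the -group service suffix and the svc.clusterset.local domain, is taken from the manifest above.

```shell
# Sketch: build the Submariner hostname for a PGD group's service.
# clusterset_host is an illustrative helper name.
clusterset_host() {
  # $1: PGD group name (for example, region-a)
  # $2: namespace (for example, pgd-group)
  printf '%s-group.%s.svc.clusterset.local\n' "$1" "$2"
}

# Print the three discovery hosts used in this example.
for group in region-a region-b region-c; do
  clusterset_host "$group" pgd-group
done
```

Running the loop prints the same three hosts that appear under discovery in the manifest.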

- Apply the region-a PGD group YAML:

oc apply -f region-a.yaml -n pgd-group

- You can now switch context to OpenShift Cluster B and create the second PGD group. The YAML for the PGD group in Cluster B is as follows. Compared with region-a, the metadata.name differs, and the manifest omits create: true under parentGroup and the additional DNS domains.

      apiVersion: pgd.k8s.enterprisedb.io/v1beta1
      kind: PGDGroup
      metadata:
        name: region-b
      spec:
        instances: 2
        proxyInstances: 2
        witnessInstances: 1
        pgd:
          parentGroup:
            name: world
          discovery:
            - host: region-a-group.pgd-group.svc.clusterset.local
            - host: region-b-group.pgd-group.svc.clusterset.local
            - host: region-c-group.pgd-group.svc.clusterset.local
        cnp:
          storage:
            size: 1Gi
        connectivity:
          dns:
            domain: "pgd-group.svc.clusterset.local"
          tls:
            mode: verify-ca
            clientCert:
              caCertSecret: client-ca-key-pair
              certManager:
                spec:
                  issuerRef:
                    name: client-ca-issuer
                    kind: Issuer
                    group: cert-manager.io
            serverCert:
              caCertSecret: server-ca-key-pair
              certManager:
                spec:
                  issuerRef:
                    name: server-ca-issuer
                    kind: Issuer
                    group: cert-manager.io

- Apply the region-b PGD group YAML:

oc apply -f region-b.yaml -n pgd-group

- You can switch context to OpenShift Cluster C and create the third PGD group. The YAML for the PGD group is:

      apiVersion: pgd.k8s.enterprisedb.io/v1beta1
      kind: PGDGroup
      metadata:
        name: region-c
      spec:
        instances: 0
        proxyInstances: 0
        witnessInstances: 1
        pgd:
          parentGroup:
            name: world
          discovery:
            - host: region-a-group.pgd-group.svc.clusterset.local
            - host: region-b-group.pgd-group.svc.clusterset.local
            - host: region-c-group.pgd-group.svc.clusterset.local
        cnp:
          storage:
            size: 1Gi
        connectivity:
          dns:
            domain: "pgd-group.svc.clusterset.local"
          tls:
            mode: verify-ca
            clientCert:
              caCertSecret: client-ca-key-pair
              certManager:
                spec:
                  issuerRef:
                    name: client-ca-issuer
                    kind: Issuer
                    group: cert-manager.io
            serverCert:
              caCertSecret: server-ca-key-pair
              certManager:
                spec:
                  issuerRef:
                    name: server-ca-issuer
                    kind: Issuer
                    group: cert-manager.io

- Apply the region-c PGD group YAML:

oc apply -f region-c.yaml -n pgd-group

Now you can switch context back to OpenShift Cluster A and check the status of the PGD group there:

oc get pgdgroup region-a -n pgd-group

The PGD group is in the phase PGD - Waiting for node discovery.

After creating the PGD groups in each OpenShift cluster, which in turn creates the services for each node, you need to expose the services to the other OpenShift clusters. You can do this in various ways.

If you're using Submariner, you can do it using the subctl command. Run the subctl export service command for each service in the pgd-group namespace that has a -group or -node suffix. You can do this by running the following bash for loop on each cluster:

    for service in $(oc get svc -n pgd-group --no-headers -o custom-columns="NAME:.metadata.name" | grep -E '(-group|-node)$'); do
      subctl export service $service -n pgd-group
    done
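To confirm that nothing was missed, you can compare the services against the exports that exist. The sketch below assumes that subctl export service creates a ServiceExport object with the same name and namespace as the service, which is how Submariner's service discovery is generally described. Verify this against your Submariner version. The helper name missing_exports is hypothetical.

```shell
# Sketch: print every -group or -node service that has no ServiceExport.
# missing_exports is an illustrative helper name. The ServiceExport lookup
# is an assumption about how Submariner records exported services.
missing_exports() {
  # $1: namespace to check (pgd-group in this example)
  ns="$1"
  oc get svc -n "$ns" --no-headers -o custom-columns="NAME:.metadata.name" |
    grep -E '(-group|-node)$' |
    while read -r service; do
      # A failed lookup is treated as "not exported".
      oc get serviceexport "$service" -n "$ns" >/dev/null 2>&1 ||
        printf '%s\n' "$service"
    done
}

# Example: no output means every matching service has been exported.
# missing_exports pgd-group
```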

After a few minutes, the status shows that the PGD group is healthy. Once each PGD group is healthy, you can write to the app database in either of the two data groups: region-a or region-b. The data is replicated to the other data group.